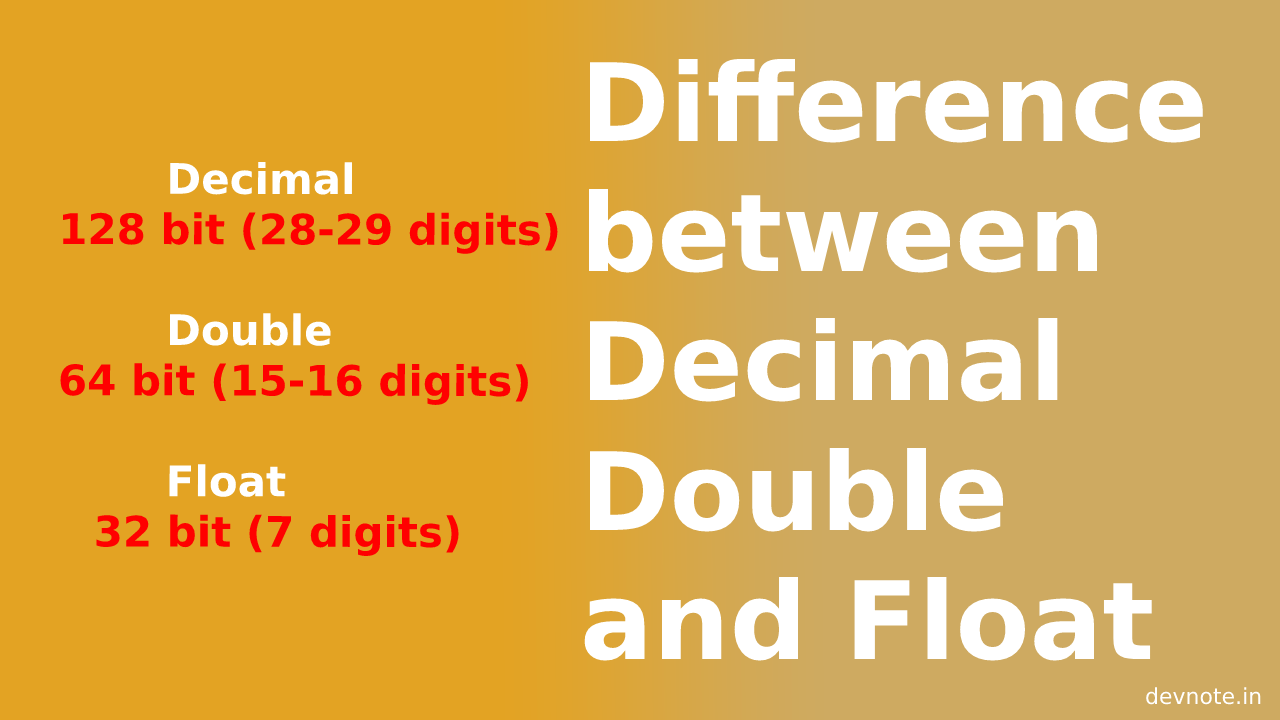

Difference between Decimal, Double and Float

In this tutorial, we will learn the difference between Decimal, Double and Float. All three store non-integer numbers, but they differ in how they store values. The main difference is precision: decimal is a 128-bit decimal floating-point data type, double is a 64-bit double-precision binary floating-point data type, and float is a 32-bit single-precision binary floating-point data type.

Decimal – 128 bit (28-29 significant digits)

Double – 64 bit (about 15-16 significant digits)

Float – 32 bit (about 7 significant digits)
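The following small Python sketch shows what these precision figures mean in practice by computing 1/3 three ways. Python is used only for illustration, and the example rests on a few assumptions. Python has no built-in 32-bit float, so the hypothetical helper `to_float32` round-trips a value through the standard `struct` module. Python's `float` is a 64-bit double. Python's `decimal.Decimal` stands in for a 128-bit decimal type. It is not the same type, but its default context also keeps 28 significant digits.

```python
import struct
from decimal import Decimal, getcontext

def to_float32(x: float) -> float:
    """Hypothetical helper: round-trip x through a 32-bit single-precision float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

third64 = 1 / 3                      # Python float: 64-bit double
third32 = to_float32(third64)        # the same value squeezed into 32 bits
third_dec = Decimal(1) / Decimal(3)  # base-10, 28 significant digits by default

print(repr(third32))      # 0.3333333432674408  (only ~7 digits are correct)
print(repr(third64))      # 0.3333333333333333  (~15-16 digits)
print(third_dec)          # 0.3333333333333333333333333333  (28 digits)
print(getcontext().prec)  # 28
```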

Difference between Decimal, Float and Double

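The main difference is that decimal stores values as a base-10 (decimal) floating-point type, while float and double are binary (base-2) floating-point types. Performance-wise, decimal is slower than double and float, because binary floating-point arithmetic runs directly in hardware while decimal arithmetic is usually done in software.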
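The storage difference can be seen in a minimal Python sketch. It uses the standard `decimal` module as a stand-in for a decimal type. A binary double cannot hold 0.1 exactly, so small errors appear in arithmetic. A base-10 type built from strings avoids those errors.

```python
from decimal import Decimal

# Converting the double 0.1 to Decimal reveals the binary value actually stored.
stored_value = Decimal(0.1)
print(stored_value)  # 0.1000000000000000055511151231257827021181583404541015625

# Binary floating point: the tiny representation errors show up in comparisons.
binary_equal = 0.1 + 0.2 == 0.3
print(binary_equal)  # False

# Decimal floating point built from strings: base-10 digits are stored exactly.
decimal_equal = Decimal("0.1") + Decimal("0.2") == Decimal("0.3")
print(decimal_equal)  # True
```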

Where To Use Decimal, Double, and Float

Decimal

The decimal numeral system is the standard system for writing integer and non-integer numbers in base 10. For financial applications it is better to use the Decimal type, because it stores base-10 amounts such as 0.10 exactly and so gives a high level of accuracy.
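The following Python sketch illustrates why this matters for money by adding ten payments of 10 cents. It again uses Python's `decimal` module as a stand-in for a decimal type. The binary total drifts, while the decimal total stays exact.

```python
from decimal import Decimal

# Ten payments of 10 cents each.
float_total = sum([0.10] * 10)
decimal_total = sum([Decimal("0.10")] * 10)

print(float_total)    # 0.9999999999999999
print(decimal_total)  # 1.00
```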

Example

DECIMAL(6,2) stores values with up to six digits in total, two of which are after the decimal point.

So a DECIMAL(6,2) column can store values in the range from -9999.99 to 9999.99.
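The range follows from the two numbers. DECIMAL(M,D) keeps M-D digits before the point and D after, so the largest value is 10^(M-D) - 10^(-D). The Python sketch below models this with two hypothetical helpers, `decimal_range` and `store_decimal`. It does not show how any database actually implements the type. Two behaviors are assumptions about the database, which can depend on its settings: rounding ties away from zero (`ROUND_HALF_UP` in Python) and raising an error for out-of-range values.

```python
from decimal import Decimal, ROUND_HALF_UP

def decimal_range(precision: int, scale: int) -> tuple:
    """Smallest and largest values a DECIMAL(precision, scale) column can hold."""
    step = Decimal(1).scaleb(-scale)  # 0.01 when scale is 2
    largest = Decimal(10) ** (precision - scale) - step
    return -largest, largest

def store_decimal(value: str, precision: int, scale: int) -> Decimal:
    """Hypothetical helper: round to `scale` places, then reject out-of-range values."""
    low, high = decimal_range(precision, scale)
    # Assumption: ties round away from zero.
    rounded = Decimal(value).quantize(Decimal(1).scaleb(-scale), rounding=ROUND_HALF_UP)
    if not low <= rounded <= high:
        # Assumption: out-of-range values are rejected rather than clipped.
        raise ValueError(f"{value} is out of range for DECIMAL({precision},{scale})")
    return rounded

print(decimal_range(6, 2))             # (Decimal('-9999.99'), Decimal('9999.99'))
print(store_decimal("777.777", 6, 2))  # 777.78
```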

Double

The double data type is a 64-bit double-precision floating-point type. Double is probably the most commonly used data type for real values.
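As a rough check of the 15-16 digit figure, this Python example uses the built-in `float`, which is a 64-bit double on mainstream platforms. The standard `sys.float_info` reports its limits, and past 2**53 (a 16-digit number) a double can no longer represent every integer.

```python
import sys

# Python's float is an IEEE 754 double on mainstream platforms.
print(sys.float_info.mant_dig)  # 53 (bits in the significand)
print(sys.float_info.dig)       # 15 (decimal digits guaranteed to survive a round trip)

# Beyond 2**53 not every integer fits: 2**53 + 1 rounds back to 2**53.
print(float(2**53 + 1) == float(2**53))  # True
```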

Example

DOUBLE(7,4) stores values with up to seven digits in total, four of which are after the decimal point.

For example, if you insert 777.00009 into a DOUBLE(7,4) column, the stored result is 777.0001.
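The rounding in this example can be sketched in Python with a hypothetical helper, `store_double`. It keeps D decimal places and rejects values wider than M digits. This is only a model built on two assumptions. Python's `round` works on the exact binary value and sends ties to the even digit, which may not match a database's rounding rule on ties. Rejecting out-of-range values is also an assumption about the database.

```python
def store_double(value: float, m: int, d: int) -> float:
    """Hypothetical model of a DOUBLE(M,D) column: keep D decimals and at most M digits."""
    rounded = round(value, d)
    limit = 10 ** (m - d) - 10 ** -d  # roughly 999.9999 for (7,4)
    if abs(rounded) > limit:
        # Assumption: out-of-range values are rejected rather than clipped.
        raise ValueError(f"{value} does not fit in DOUBLE({m},{d})")
    return rounded

print(store_double(777.00009, 7, 4))  # 777.0001
```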

Float

A float stores numbers that are not integers, that is, numbers with a fractional part, in a 32-bit binary format. It is used mostly in graphics libraries, where the very high demands for processing power favor a smaller type that takes half the memory of a double.
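Memory is one reason graphics code favors float. A 32-bit float takes half the space of a 64-bit double, so more values fit in caches and GPU buffers. The Python sketch below measures this with the standard `array` module for a hypothetical mesh of one million vertices. The item sizes of 4 and 8 bytes hold on common platforms, but Python does not guarantee them.

```python
from array import array

vertex_count = 1_000_000       # hypothetical mesh size
coords = 3 * vertex_count      # x, y, z per vertex

single = array("f", [0.0]) * coords  # 32-bit floats
double = array("d", [0.0]) * coords  # 64-bit doubles

print(single.itemsize, double.itemsize)        # 4 8 on common platforms
print(single.itemsize * len(single) // 2**20)  # 11 (MiB, rounded down)
print(double.itemsize * len(double) // 2**20)  # 22 (MiB, rounded down)
```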

Example

FLOAT(8,3) stores values with up to eight digits in total, three of which are after the decimal point.

For example, if you insert 777.000009 into a FLOAT(8,3) column, the stored result is 777.000, because only three digits after the decimal point are kept.
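The roughly 7-digit limit of a 32-bit float matters here as well. The value 777.000009 has nine significant digits, so it cannot survive in single precision. This Python sketch uses a hypothetical helper, `to_float32`, to round-trip the value through 32 bits with the standard `struct` module. It then keeps three decimals, as a FLOAT(8,3) column would. It models only the precision loss, not a database's exact storage rules.

```python
import struct

def to_float32(x: float) -> float:
    """Hypothetical helper: round-trip x through a 32-bit single-precision float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

value = 777.000009            # nine significant digits
stored32 = to_float32(value)  # the 0.000009 is below 32-bit resolution near 777
print(stored32)               # 777.0

shown = f"{round(stored32, 3):.3f}"  # keep three decimals
print(shown)                         # 777.000
```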